1.10. Permutation testing - more practice#

The aim of these exercises is to give you some practice running permutation tests and deciding what to permute and what your test statistic is in each case!

1.10.1. Set up Python libraries#

As usual, run the code cell below to import the relevant Python libraries

# Set-up Python libraries - you need to run this but you don't need to change it

import numpy as np

import matplotlib.pyplot as plt

import scipy.stats as stats

import pandas as pd

import seaborn as sns

sns.set_theme(style='white')

import statsmodels.api as sm

import statsmodels.formula.api as smf

import warnings

warnings.simplefilter('ignore', category=FutureWarning)

1.10.2. Import and view the data#

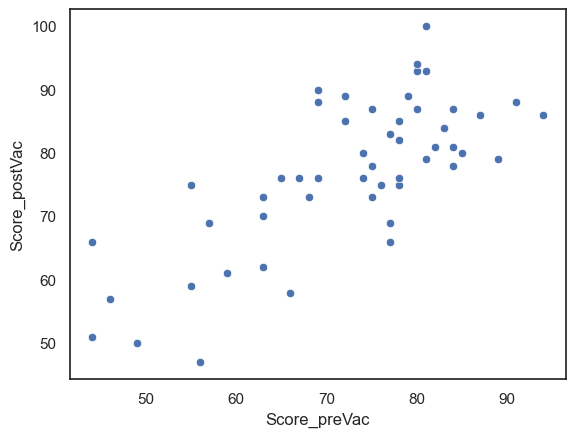

We will work with a fictional dataset containing wellbeing scores pre- and post the vacation for 300 Oxford students.

For each student was also have the following information:

subject studied

college

wb = pd.read_csv('https://raw.githubusercontent.com/jillxoreilly/StatsCourseBook_2024/main/data/WellbeingSample.csv')

wb

| ID_code | College | Subject | Score_preVac | Score_postVac | |

|---|---|---|---|---|---|

| 0 | 247610 | Lonsdale | PPE | 60 | 35 |

| 1 | 448590 | Lonsdale | PPE | 43 | 44 |

| 2 | 491100 | Lonsdale | engineering | 79 | 69 |

| 3 | 316150 | Lonsdale | PPE | 55 | 61 |

| 4 | 251870 | Lonsdale | engineering | 62 | 65 |

| ... | ... | ... | ... | ... | ... |

| 296 | 440570 | Beaufort | history | 75 | 70 |

| 297 | 826030 | Beaufort | maths | 52 | 49 |

| 298 | 856260 | Beaufort | Biology | 83 | 84 |

| 299 | 947060 | Beaufort | engineering | 62 | 65 |

| 300 | 165780 | Beaufort | PPE | 48 | 56 |

301 rows × 5 columns

1.10.3. 1. For engineering students, is wellbeing increased after the vacation compared to before?#

We hypothesise that engineering students will feel better after the vacation. Test the hypothesis using a permutation test.

First let’s remind ourselves how to get just the rows of the dataframe containing engineering students:

wb.query('Subject == "engineering"')

| ID_code | College | Subject | Score_preVac | Score_postVac | |

|---|---|---|---|---|---|

| 2 | 491100 | Lonsdale | engineering | 79 | 69 |

| 4 | 251870 | Lonsdale | engineering | 62 | 65 |

| 6 | 841260 | Lonsdale | engineering | 71 | 58 |

| 7 | 960120 | Lonsdale | engineering | 54 | 54 |

| 15 | 670880 | Lonsdale | engineering | 70 | 69 |

| ... | ... | ... | ... | ... | ... |

| 266 | 842870 | Beaufort | engineering | 52 | 58 |

| 278 | 414020 | Beaufort | engineering | 76 | 82 |

| 291 | 384240 | Beaufort | engineering | 78 | 89 |

| 294 | 457900 | Beaufort | engineering | 72 | 62 |

| 299 | 947060 | Beaufort | engineering | 62 | 65 |

61 rows × 5 columns

Things you need to decide:#

what is our null hypothesis

what is our alternative hypothesis?

Is it a paired or unpaired test?

therefore which

permutation_typeis needed,samples,pairingsorindependent?are we testing for the mean difference (within pairs)

mean(x-y), or the difference of meansmean(x)-mean(y)what actually are

xandyin this case?

Is it a one- or two-tailed test?

therefore which

alternativehypothesis type is needed,two-sided,greaterorless?

What \(\alpha\) value will you use?

what value must \(p\) be smaller than, to reject the null hypothesis?

this is the experimenter’s choice but usually 0.05 is used (sometimes 0.001 or 0.001)

The answers to the things you need to decide!#

\(\mathcal{H_0}\): The mean difference in wellbeing post-pre vacation is zero for engineering students

\(\mathcal{H_a}\): The mean difference in wellbeing post-pre vacation is greater than zero for engineering students, ie wellbeing improves over the vacation.

This is a paired test as we are comparing each person’s wellbeing before the vacation to their own wellbeing after the vacation. Therefore we are testing the mean difference mean(x-y) and need to shuffle the samples (flip pre and post labels with pairs, but don’t break the pairings)

# define a funtion that gives the mean difference for two series x and y

def mDiff(x,y):

return np.mean(x-y)

It’s a one-tailed test because our hypothesis is directional (we expect wellbeing to increase after the vacation) and if we calculate of difference as post-pre, a significant effect would be a big positive difference, so we need alternative='greater'

We will test at the 5% (\(\alpha=0.05\)) level

Run the test!#

Here is some code to run the test

post = wb.query('Subject == "engineering"').Score_postVac

pre = wb.query('Subject == "engineering"').Score_preVac

stats.permutation_test((post,pre), mDiff, alternative='greater', permutation_type='samples', n_resamples=10000)

PermutationTestResult(statistic=1.0491803278688525, pvalue=0.21377862213778623, null_distribution=array([ 0.45901639, -2.59016393, 0.03278689, ..., -0.72131148,

0.32786885, -0.91803279]))

Is it significant?#

Reading the test output:

statistic=1.0491803278688525

The mean post-pre wellfare score difference in our ‘real’ sample was 1.049 points (welfare was 1.049 points higher after the vac - this is a small increase as it’s a 100-point scale)

pvalue=0.214

The probability of obtaining such a large increase in welfare due to chance if there was really no effect of the vacation is 21.4%

Conclusions#

We fail to reject the null hypothesis as \(p\) is not smaller than \(\alpha\)

How would you write that up in a journal article?#

We hypothesised that wellbeing increased over the vacation. We compared wellbeing in engineering students (n=61) before and after the vacation using a paired samples permutation test. There was no significant increase in wellbeing after the vacation (mean difference is an increase of 1.049 points, p=0.214, one tailed).

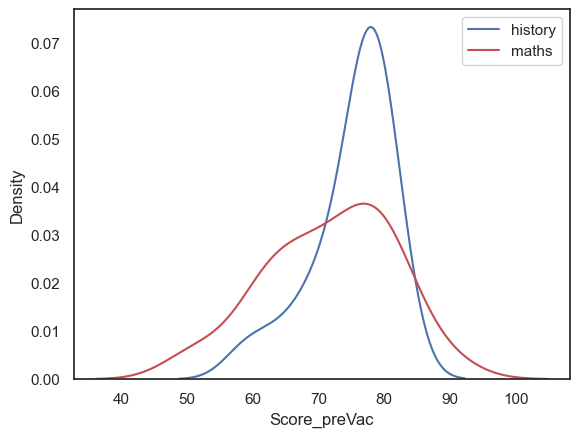

1.10.4. 2. Do does wellbeing (before the vacation) differ between history and maths students?#

We hypothesise that students studying different subjects may have different levels of wellbeing but we don’t have an a priori expectation about which subjects will have higher wellbeing.

Test the hypothesis using a permutation test.

Let’s get just the prevacation wellbeing scores for history students and just maths students:

history = wb.query('Subject == "history"').Score_preVac

maths = wb.query('Subject == "maths"').Score_preVac

Things you need to decide:#

what is our null hypothesis

what is our alternative hypothesis?

Is it a paired or unpaired test?

therefore which

permutation_typeis needed,samples,pairingsorindependent?are we testing for the mean difference (within pairs)

mean(x-y), or the difference of meansmean(x)-mean(y)what actually are

xandyin this case?

Is it a one- or two-tailed test?

therefore which

alternativehypothesis type is needed,two-sided,greaterorless?

What \(\alpha\) value will you use?

what value must \(p\) be smaller than, to reject the null hypothesis?

this is the experimenter’s choice but usually 0.05 is used (sometimes 0.001 or 0.001)

The answers to the things you need to decide!#

\(\mathcal{H_0}\): The difference mean wellbeing pre vacation for history and maths students is zero

\(\mathcal{H_a}\): The difference mean wellbeing pre vacation for history and maths students is non-zero, ie one subject has higher wellbbeing

This is an independent samples test as we are comparing each person’s wellbeing before the vacation to their own wellbeing after the vacation. Therefore we are testing the mean difference mean(x)-mean(y) and need permutation_type = 'independent' (shuffle which datapoints are labelled ‘history’ or ‘maths’ but keep the number of students labelled as history and maths matching the original sample)

# define a funtion that gives the mean difference for two series x and y

def dMeans(x,y):

return np.mean(x)-np.mean(y)

It’s a two-tailed test because our hypothesis is non-directional (we don’t have particular predictions about which subject we might have higher wellbeing, we are just interested in whether there is a difference) so we need alternative=’two-sided’

We will test at the 5% (\(𝛼=0.05\)) level

Run the test!#

Here is some code to run the test:

stats.permutation_test((history,maths), dMeans, alternative='two-sided', permutation_type='independent', n_resamples=10000)

PermutationTestResult(statistic=3.486388384755003, pvalue=0.18018198180181982, null_distribution=array([3.83484574, 0.69872958, 0.35027223, ..., 2.87658802, 1.56987296,

3.92196007]))

Is it significant?#

Reading the test output:

statistic=3.486388384755003

The difference in mean welfare score in our ‘real’ sample was 3.49 points (welfare was 3.49 points higher for history students)

pvalue=0.16718328167183283

The probability of obtaining such a large difference in welfare due to chance if there was really no effect of the subject is 16.7%

Conclusions#

We fail to reject the null hypothesis as \(p\) is not smaller than \(\alpha\)

How would you write that up in a journal article?#

We hypothesised that wellbeing might differ between students in different subjects. We compared wellbeing in maths students (n=29) and history students (n=19) before the vacation using an independent samples samples permutation test. There was no significant difference in wellbeing between subjects (difference of means = 3.48 points, p=0.167, two tailed).

Note#

If we plot the distribution of wellbeing in the two subjects, we can see taht there is a lot of overlap

sns.kdeplot(history, color='b')

sns.kdeplot(maths, color='r')

plt.legend(['history','maths'])

plt.show()